Research Area: Augmented Reality (AR)

-

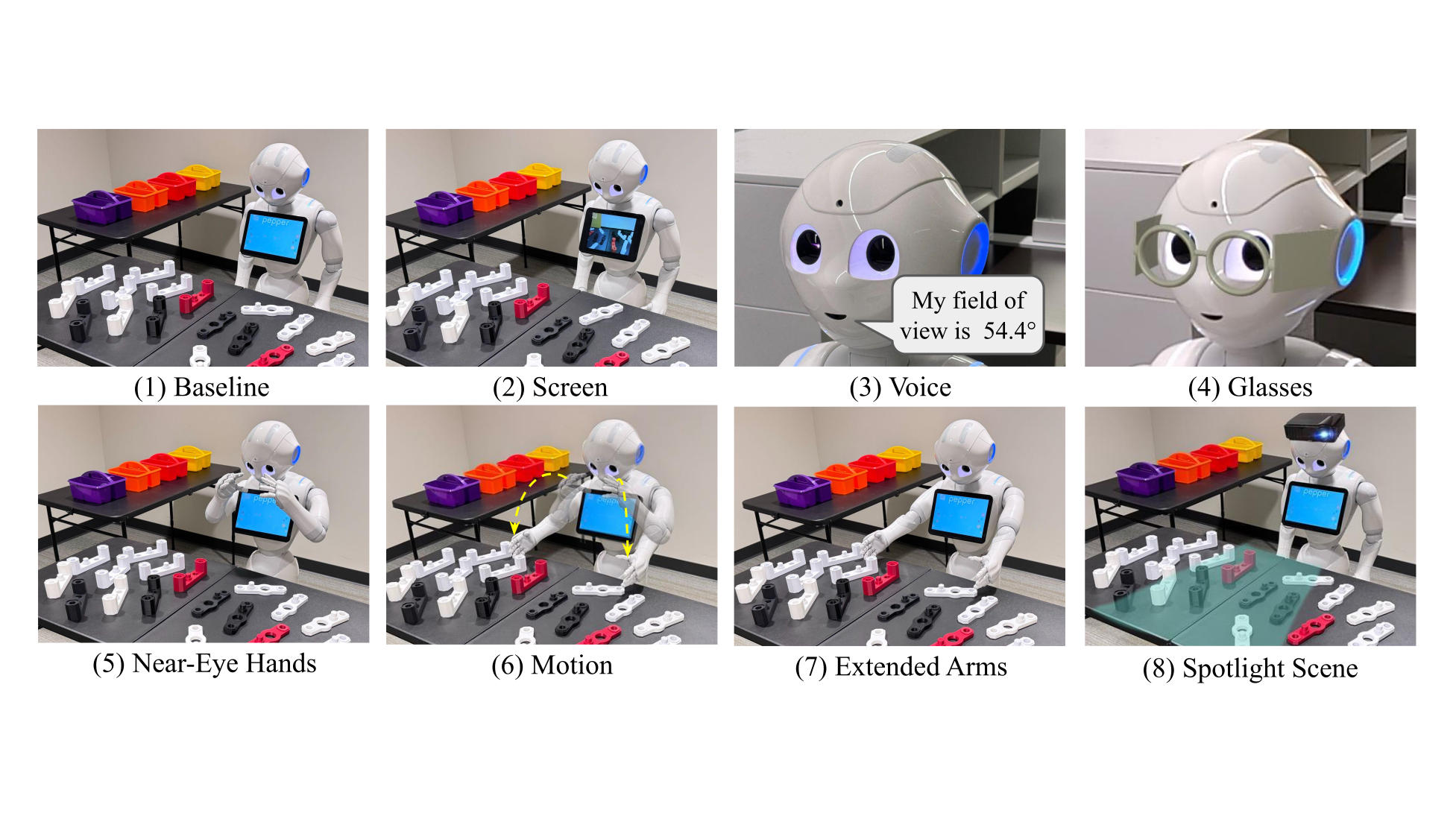

Indicating Robot Vision Capabilities with Augmented Reality

Abstract Research indicates that humans can mistakenly assume that robots and humans have the same field of view, and thus hold an inaccurate mental model of robots. This misperception may lead to failures during human-robot collaboration tasks, where robots might be asked to complete impossible tasks involving out-of-view objects. The issue is more severe when robots do…

-

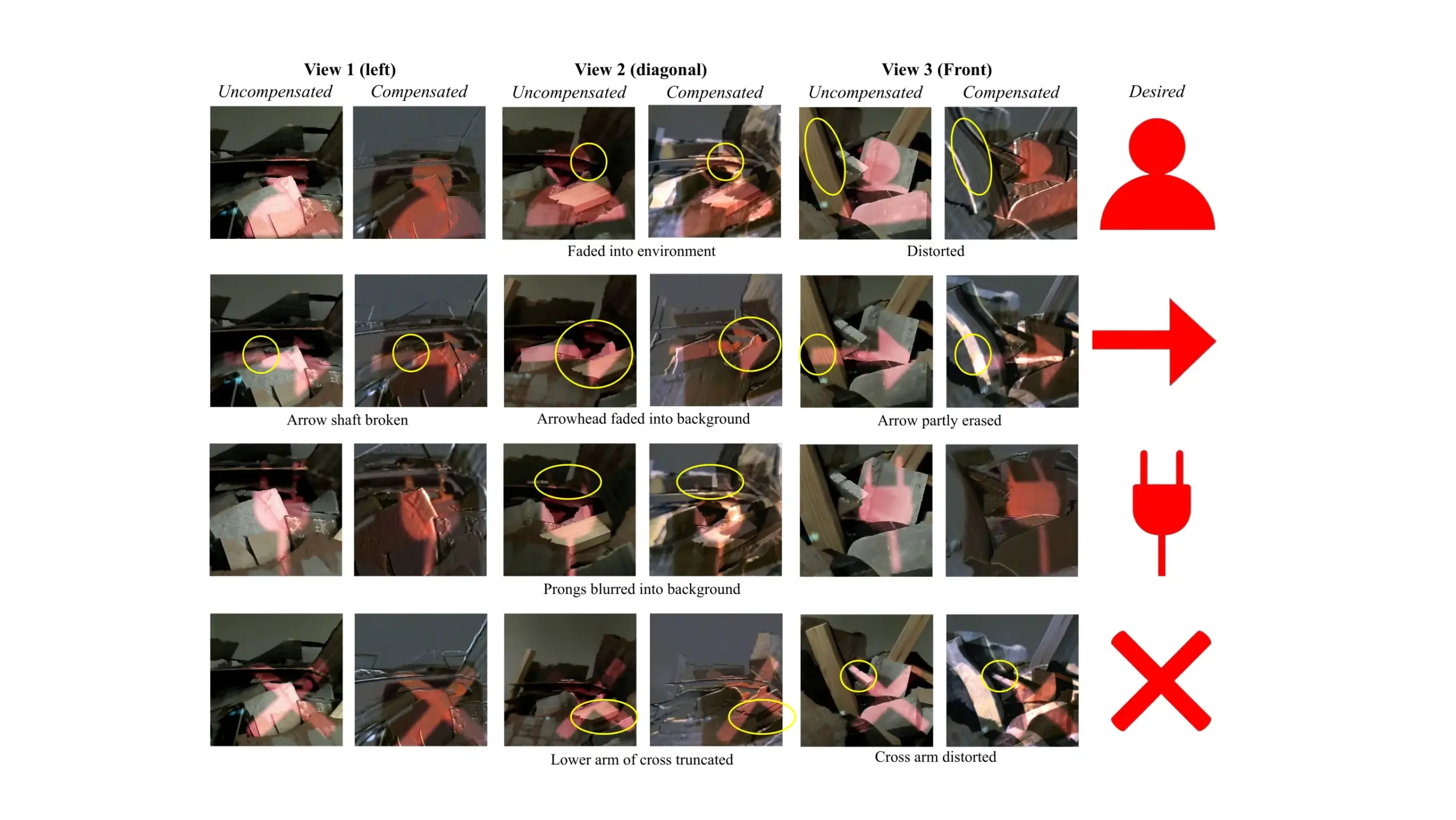

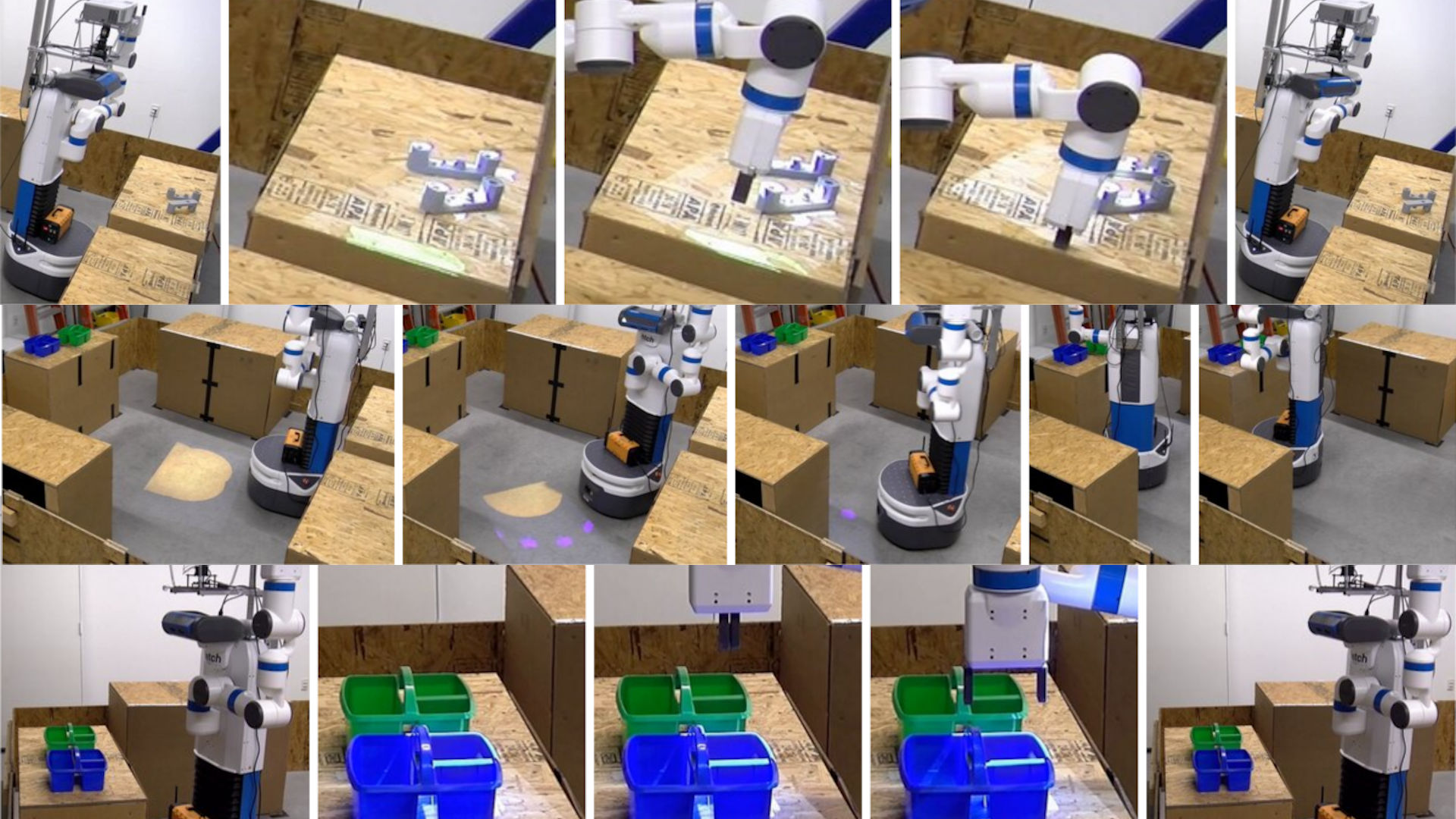

Evaluating Dynamic Surface Compensation for Robots with Projected AR

Abstract Projector-based augmented reality (AR) enables robots to communicate spatially situated information to multiple observers without requiring head-mounted displays, e.g., by projecting navigation paths. However, it requires flat and weakly textured projection surfaces; otherwise, the surface must be compensated for so that the original projected image is retained. Yet, existing compensation methods assume static projector-camera-surface configurations and may not…

-

Givenness Hierarchy Theoretic Sequencing of Robot Task Instructions

Abstract Introduction: When collaborative robots teach human teammates new tasks, they must carefully determine the order in which to explain different parts of the task. In robotics, this problem is especially challenging due to the situated and dynamic nature of robot task instruction. Method: In this work, we consider how robots can leverage the Givenness Hierarchy to…

-

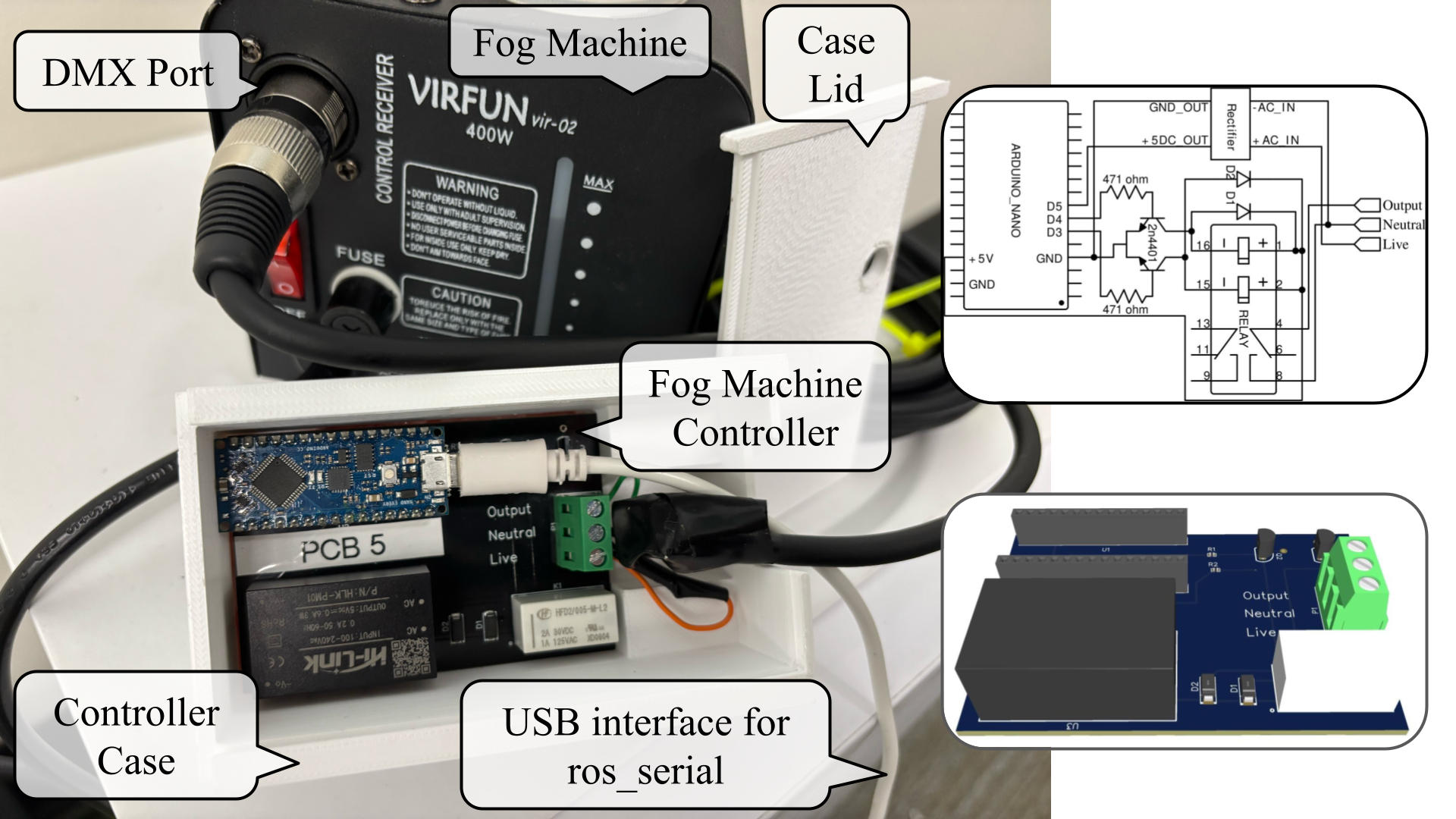

A Controller for Robots to Autonomously Control Fog Machine

Abstract Typical fog machines require manual activation and human monitoring. As a result, robots cannot interface with these fog machines to control them autonomously for potential augmented reality (AR) applications, e.g., displaying augmentations on a fog screen. To solve this issue, we replaced the fog machine’s manual remote with a custom PCB containing an…

-

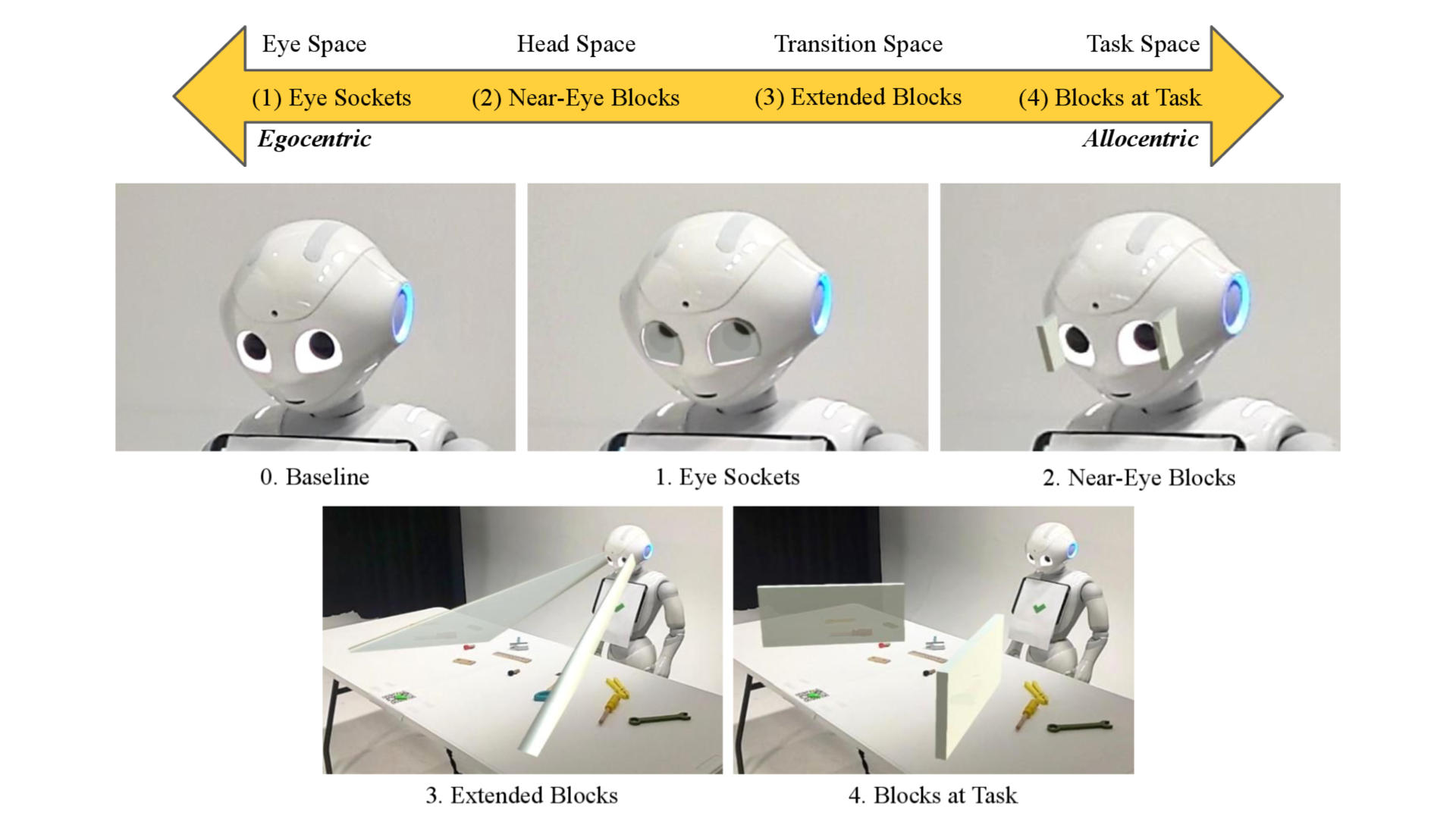

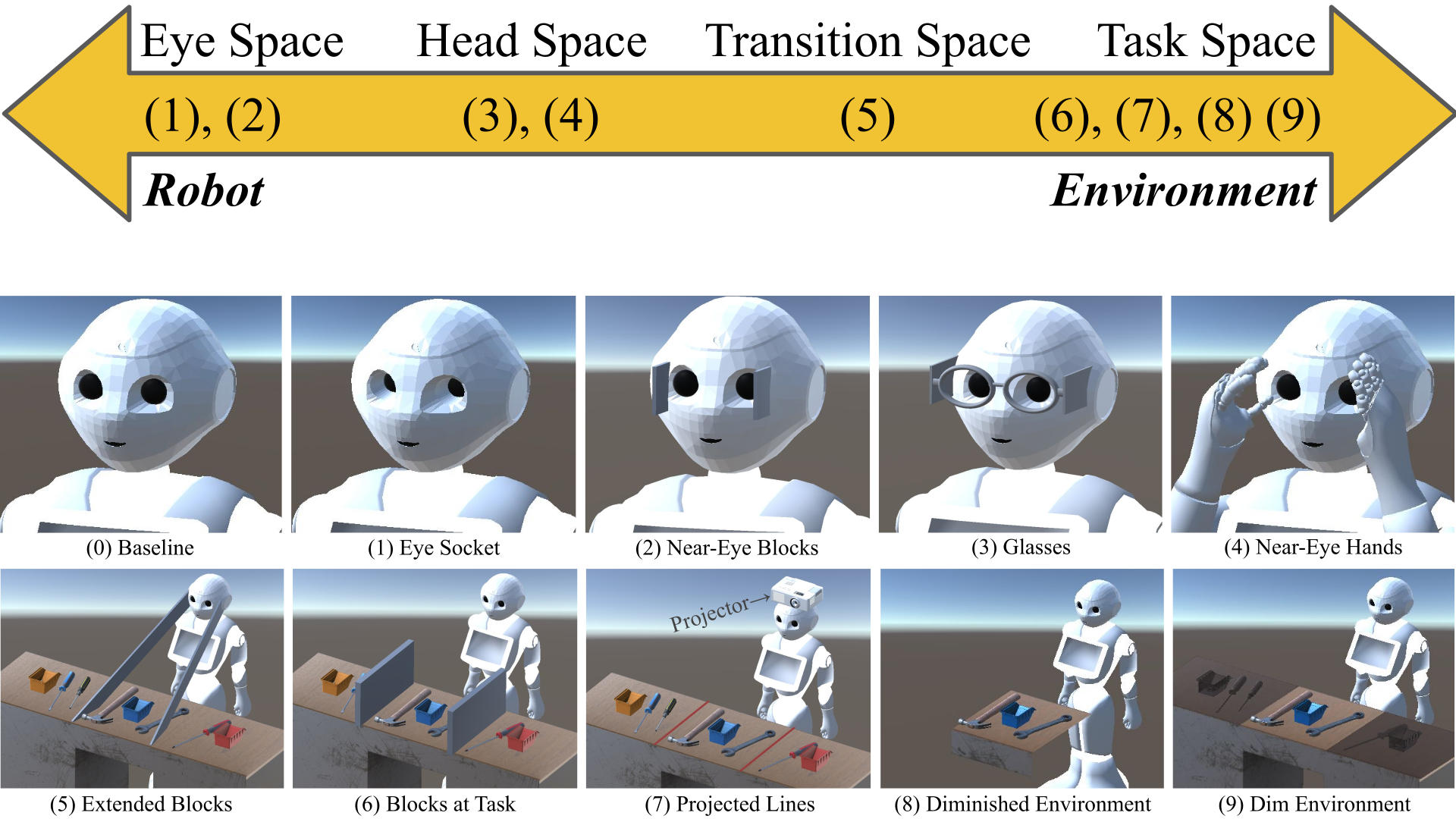

Exploring Familiar Design Strategies to Explain Robot Vision Capabilities

Abstract Humans often assume that robots share the same field of view (FoV) as themselves, given their human-like appearance. In reality, robots have a much narrower FoV (e.g., Pepper robot’s 54.4° and Fetch robot’s 54°) than humans’ 180°, leading to misaligned mental models and reduced efficiency in collaborative tasks. For instance, a user might place…

-

Anywhere Projected AR for Robot Communication: A Mid-Air Fog Screen-Robot System

Abstract Augmented reality (AR) allows visualizations to be situated where they are relevant, e.g., in a robot’s operating environment or task space. Yet, headset-based AR suffers from a scalability issue because every viewer must wear a headset. Projector-based spatial AR solves this problem by projecting augmentations onto the scene, e.g., recognized objects or navigation paths, viewable…

-

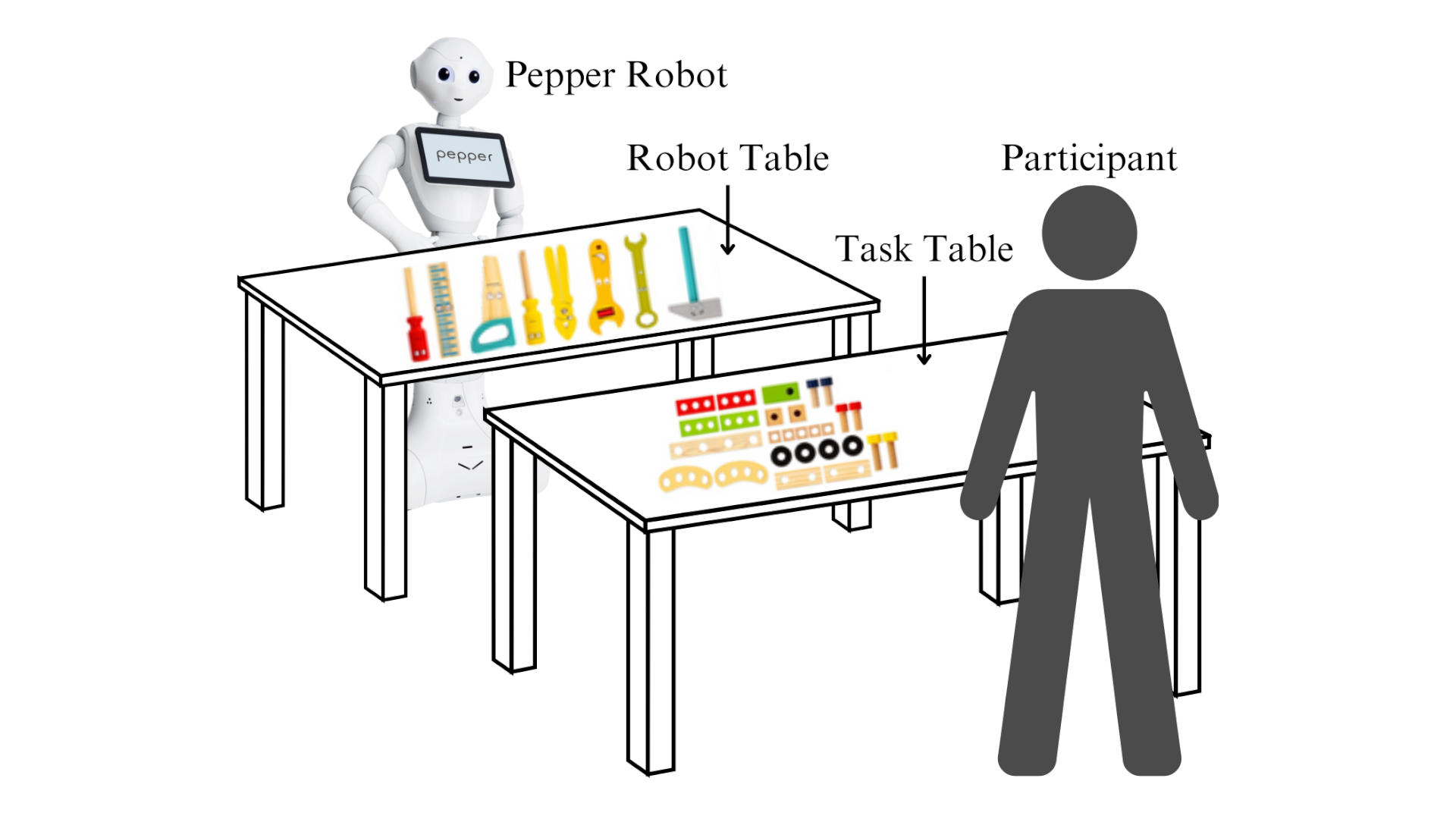

Do Results in Experiments with Virtual Robots in Augmented Reality Transfer To Physical Robots? An Experiment Design

Abstract Entry to human-robot interaction research, e.g., conducting empirical experiments, faces a significant economic barrier due to the high cost of physical robots, ranging from thousands to tens of thousands, if not millions. This cost issue also severely limits the field’s ability to replicate user studies and reproduce the results to verify their reliability, thus…

-

To Understand Indicators of Robots’ Vision Capabilities

Abstract Study [10] indicates that humans can mistakenly assume that robots and humans have the same field of view (FoV), and thus hold an inaccurate mental model of a robot. This misperception is problematic during collaborative HRI tasks, where robots might be asked to complete impossible tasks involving out-of-view objects. To help align humans’ mental models of…

-

Designing Indicators to Show a Robot’s Physical Vision Capability

Abstract In human-robot interaction (HRI), studies show that humans can mistakenly assume that robots and humans have the same field of view, and thus hold an inaccurate mental model of a robot. This misperception is problematic during collaborative HRI tasks, where robots might be asked to complete impossible tasks involving out-of-view objects. In this initial work, we aim…

-

Communicating Missing Causal Information to Explain a Robot’s Past Behavior

-

Best of Both Worlds? Combining Different Forms of Mixed Reality Deictic Gestures

-

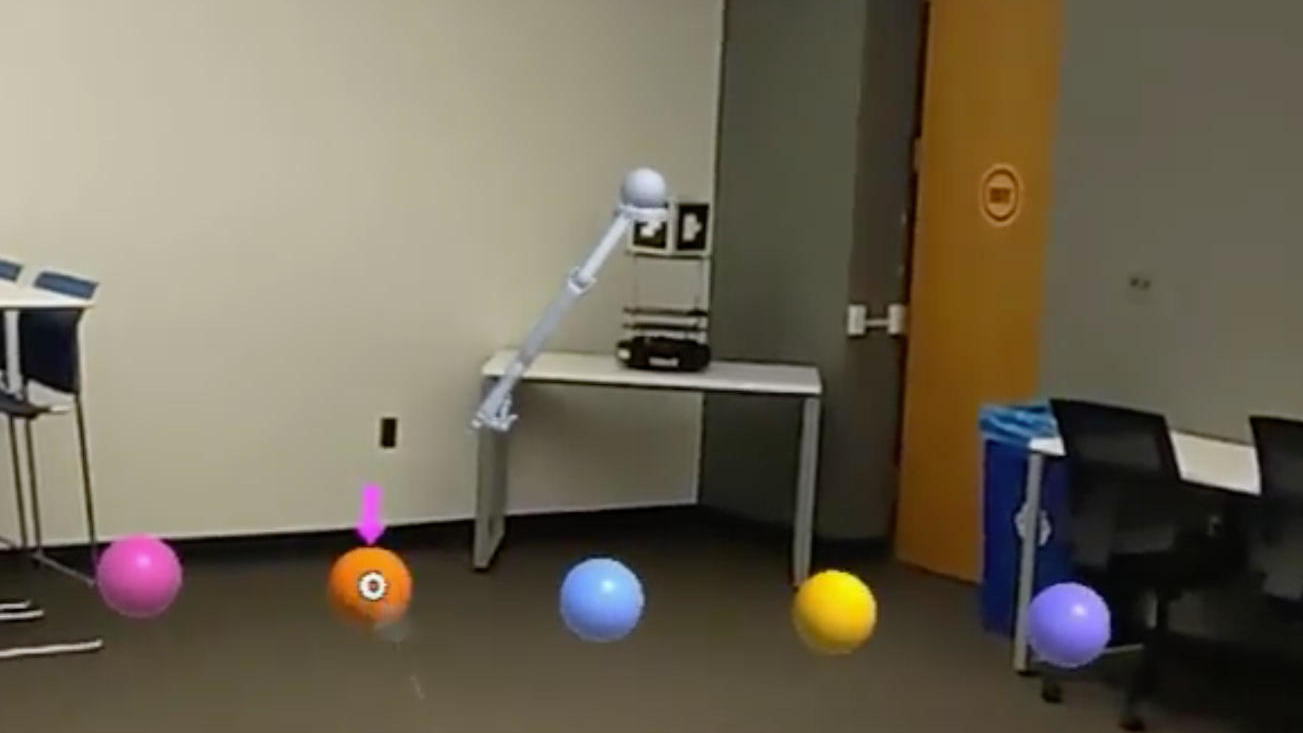

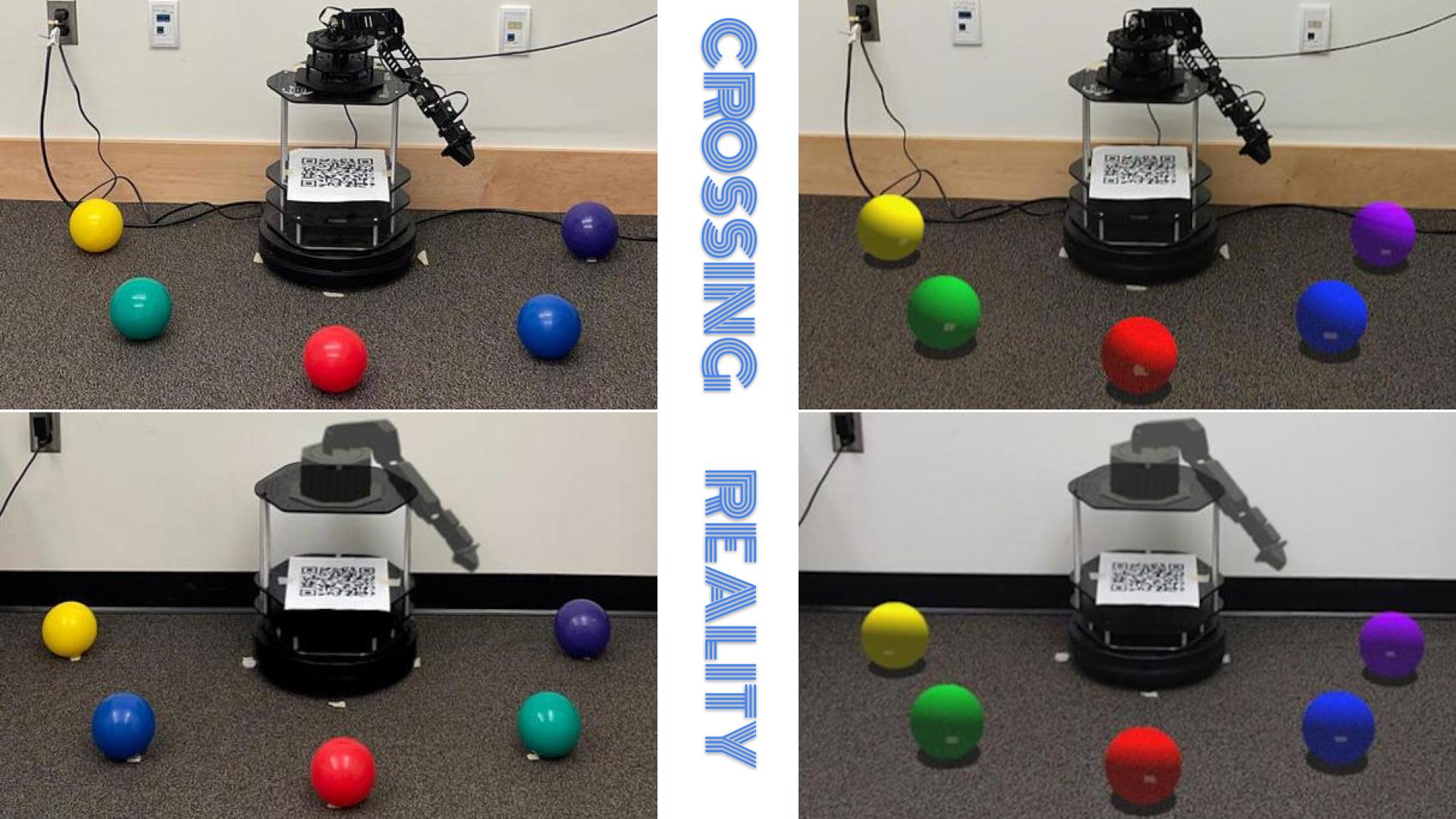

Crossing Reality: Comparing Physical and Virtual Robot Deixis

-

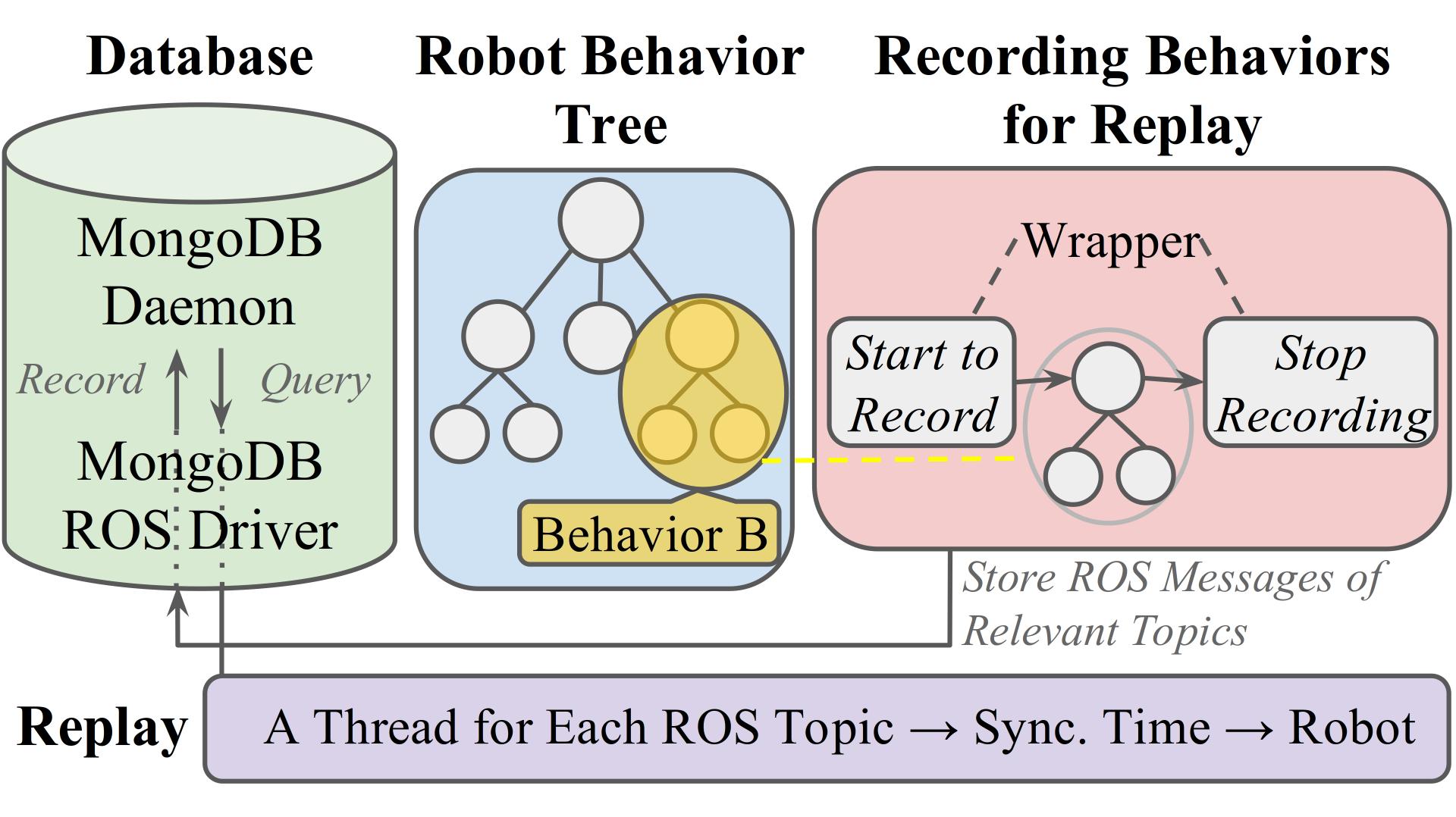

Mixed-Reality Robot Behavior Replay: A System Implementation

-

Towards an Understanding of Physical vs Virtual Robot Appendage Design

-

Projecting Robot Navigation Paths: Hardware and Software for Projected AR

-

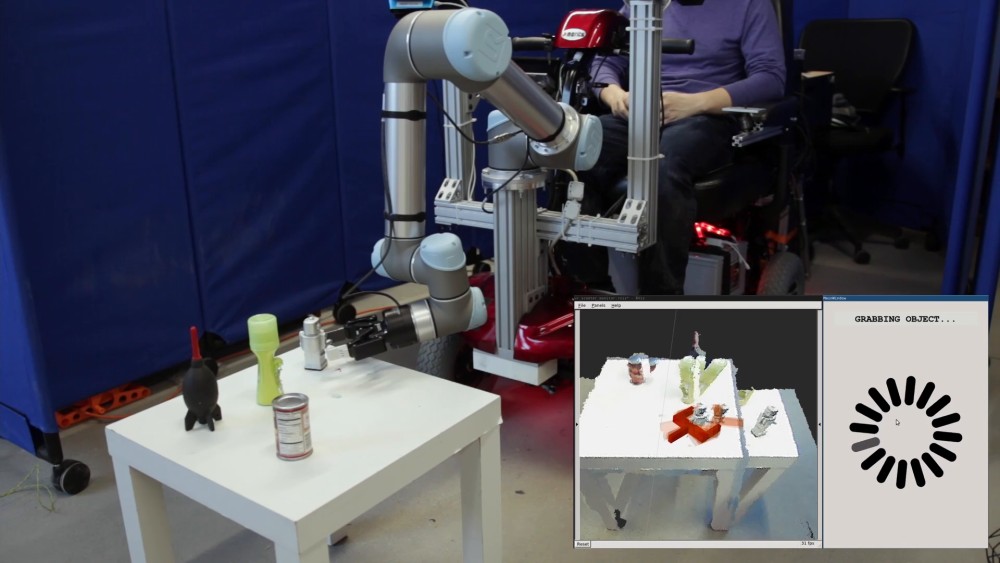

Design Guidelines for Human-Robot Interaction with Assistive Robot Manipulation Systems

-

Projection Mapping Implementation: Enabling Direct Externalization of Perception Results and Action Intent to Improve Robot Explainability